🔥 Commentary: Unpacking AI skepticism

The vibes around AI are decidedly skeptical if not outright hostile in some online spaces, and there seem to be a few reasons why

A few weeks back, I wrote a post on how Americans feel about AI, citing recent YouGov data that tells a decidedly mixed story. On the one hand, there were signs that Americans are feeling cautious and wary about the technology. On the other, more people tended to think that it would improve their lives than thought it would hurt their lives.

Big picture data like that is valuable, of course, but it doesn’t articulate the full breadth or depth of a complicated topic like this one. If you spend a lot of time on the internet and care about this topic, you’re probably aware that AI skepticism has been fully embraced by some online spaces. Being an AI optimist but also a researcher has made me curious to learn more about that skepticism.

Among the online spaces where I sometimes linger, nowhere are these strains of thinking more evident than on Bluesky, the recently emergent microblogging platform for Twitter/X refugees who (understandably) have no desire to use Meta’s Threads. In my experience, the vibes towards AI on Bluesky have always hovered somewhere between willful ignorance and outright disdain. Mark Cuban, of all people, discovered this in a pretty clear-cut way earlier this week when he shared this post:

The responses were what anyone paying attention to the tenor of conversation about AI on Bluesky might’ve predicted. It was basically a lot of this:

To Cuban’s credit, he handled the blowback with a good degree of openness. But the tone of responses to his initial post was so clear that he felt compelled to follow up later, sharing his bemusement:

So what’s going on here? There seem to be a few factors driving AI skepticism, each of them blending into one another in various ways. Posts like the responses that Cuban got on Bluesky tend to display one or more of the following, in my view:

Politically-minded distrust: left-leaning people who associate AI with Silicon Valley’s recent rightward turn and hate it by association (this could also be called an anti-billionaire/anti-tech/anti-VC dimension); people in this bucket don’t dismiss AI’s technological potential (in fact, they acknowledge it), and some worry that it will cost humans their jobs, make the rich richer, and make the poor poorer

An anti- non-deterministic software dimension: folks who cite hallucinations from LLMs and bizarre media outputs (photos, videos, songs, etc.) as evidence that AI is fundamentally flawed as an approach to building useful software; these folks dismiss AI wholesale because they think it’s nothing but smoke and mirrors

A moral dimension: people who are angry that models have been trained without permission from creators, rights holders, etc. and are opposed to using them at all as a result

A fool-me-once dimension: people who think AI is simply the latest hype cycle for VCs and rich people to pump before they dump, having made their money and leaving behind a landscape of unfulfilled promises; while overlapping with politically-minded distrust, this dimension seems distinct to me, and is rooted more in a cold skepticism than a political worldview

If you accept that there’s some truth to each of these as motivations for AI skepticism (and maybe you don’t!), there’s a lot to unpack here. Some thoughts on the first two buckets:

Politically-minded distrust

For one, it does seem to me that AI as a field, an idea, a bogeyman, or even just a meme now finds itself firmly engulfed in the culture wars. And while it’s certainly not true that all people on the left are AI skeptics (👋), many strains of AI skepticism do seem to have a leftward ideological underpinning.

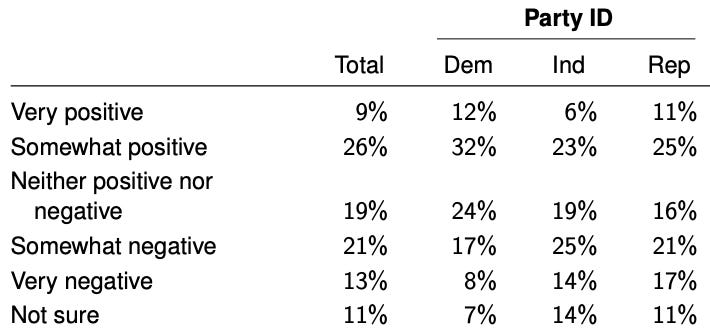

Interestingly, though (and to play devil’s advocate against this purely vibes-based observation), I don’t see a lot of evidence that the converse is true: I don’t know that right-leaning people tend to be more enthusiastic about AI than those on the left. In fact, if you look at the political party breakdowns for the YouGov data I mentioned earlier, there doesn’t seem to be an overwhelming political divide at all:

So what to make of what seems to be politically-oriented AI skepticism emanating from the left? I think there are two possibilities:

I’m wrong and it’s not very common. Anyone who knows me well knows my penchant for making terrible predictions— it’s why I try not to make many of them. It’s definitely possible that I’m just viewing the world through an extremely potent filter when reading Bluesky. (That much, in fact, is certainly true.)

I’m right and it just hasn’t shown up in the national data yet. Saying this dynamic only shows up through a filter bubble doesn’t discount the existence proof that each of Cuban’s negative replies provides. The sentiment is definitely real; it’s just a question of how common it is. My strong suspicion is that there’s growing momentum behind AI skepticism on the left given the cultural and political moment we find ourselves in, and I’d be surprised if the numbers don’t eventually show it.

Anti- non-deterministic software

This is a clumsy name for what I think is the most interesting dimension driving AI skepticism. What’s fascinating to me about it is that it seems grounded in a view that the outputs from software and computers are supposed to be objective, logical, predictable, and correct in some sense by their very nature.

That, of course, is just not true of generative AI— hallucinations are real, mistakes are real, poor quality outputs of all types are real. So these folks aren’t wrong. But they do seem unwilling to accept a world where we engage with computers more like we engage with other people: knowing that what we hear from people may have inaccuracies, be driven by ulterior motives, etc., but at the same time having a corpus of signals to fall back on that helps us make sense of that information nonetheless: is this person credible overall? Are they credible on this topic? What might they want me to do with this information? How much credence should I give it as a result?

The shift from deterministic interactions with software to probabilistic ones is profound, and for some people, one bad experience with an LLM or other type of model or tool will damage the credibility they ascribe to AI for a long time. Compounding this challenge is the fact that, as with any tool (or as with interacting with people!), it takes trial and error, experience, and developing your own set of heuristics to understand how best to use AI. Without a willingness to engage, experiment, and learn, many people will see bad outputs and discount the whole field forever. Ethan Mollick referred to this gap recently as “the jagged frontier”:

Last week, I wrote about a paper that found evidence that people who tend to avoid uncertainty are more likely to project feelings of “creepiness” onto technology that seems to know more about them than they want it to. Those feelings tend to feed into a broader pattern of distrust and skepticism towards those types of technology and, sometimes, lead to conscious decisions to avoid engaging with them altogether. I wonder if a similar pattern could play out with AI skepticism.

It’s good to have healthy skepticism of new technologies, of course, especially during hype cycles like the one we’re in with AI. And any new technological wave creates ripples of people who reject it outright, question its value, or want it to play no role in their lives. That’s all fair and even good. My suspicion, though, is that the value of AI is so profound, and it will eventually reach into so many disparate parts of our lives, that skeptics will face a choice that many other massive technological waves like the internet, smartphones, or social media have forced us all to make: accept its role in your life in some measure, or miss out on a difficult-to-measure-but-obviously-very-real set of benefits.

All of this, of course, would make great fodder for real research instead of me spouting nonsense in a commentary post. Perhaps we’ll do just that in a future research project from The Understanders. In the meantime, I’d love to hear your take: what am I missing about AI skepticism? What research have you seen about it? I’m ready, as always, to be proven wrong on any of this stuff.